Analytics and Data Science: Stepping up the data processing game

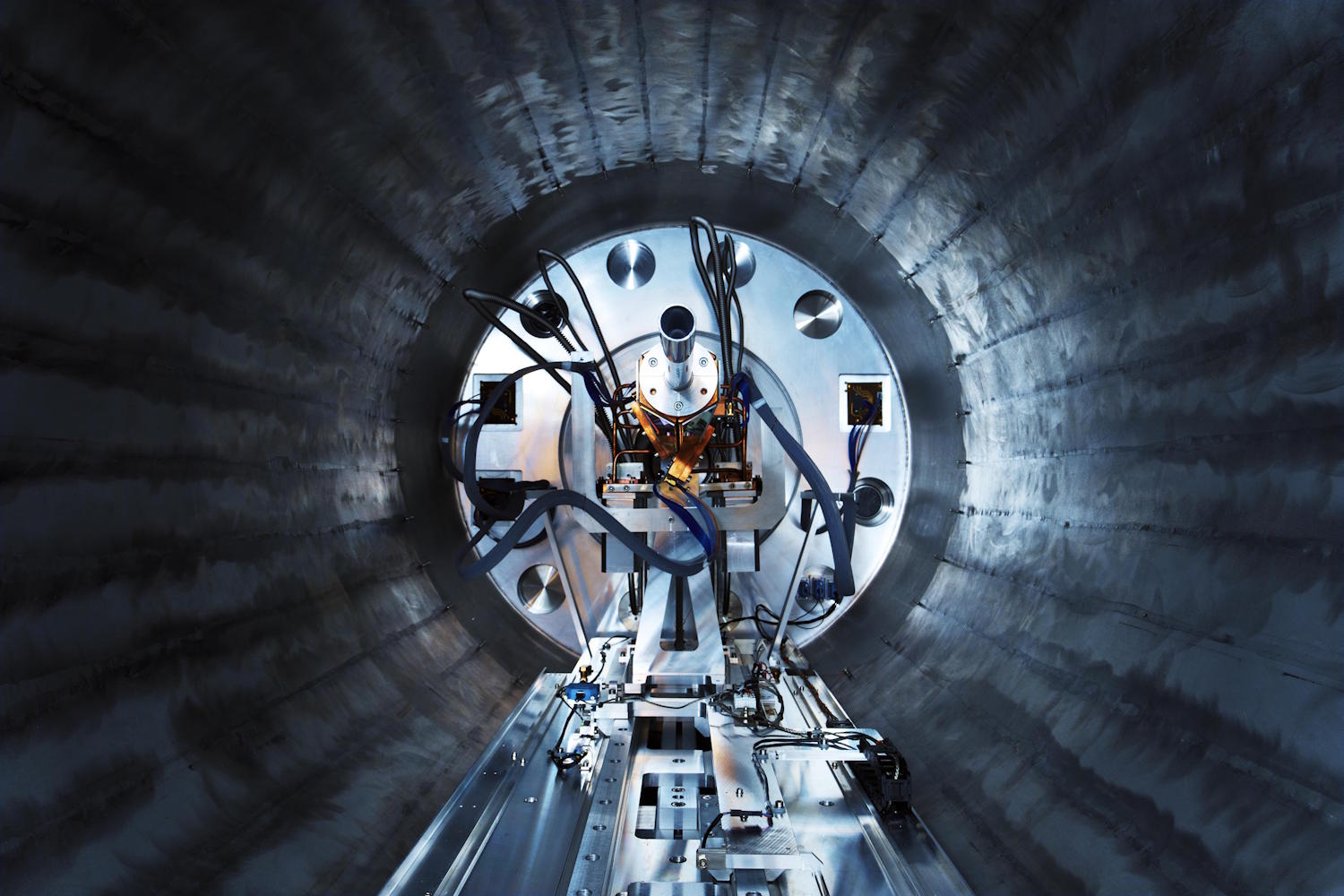

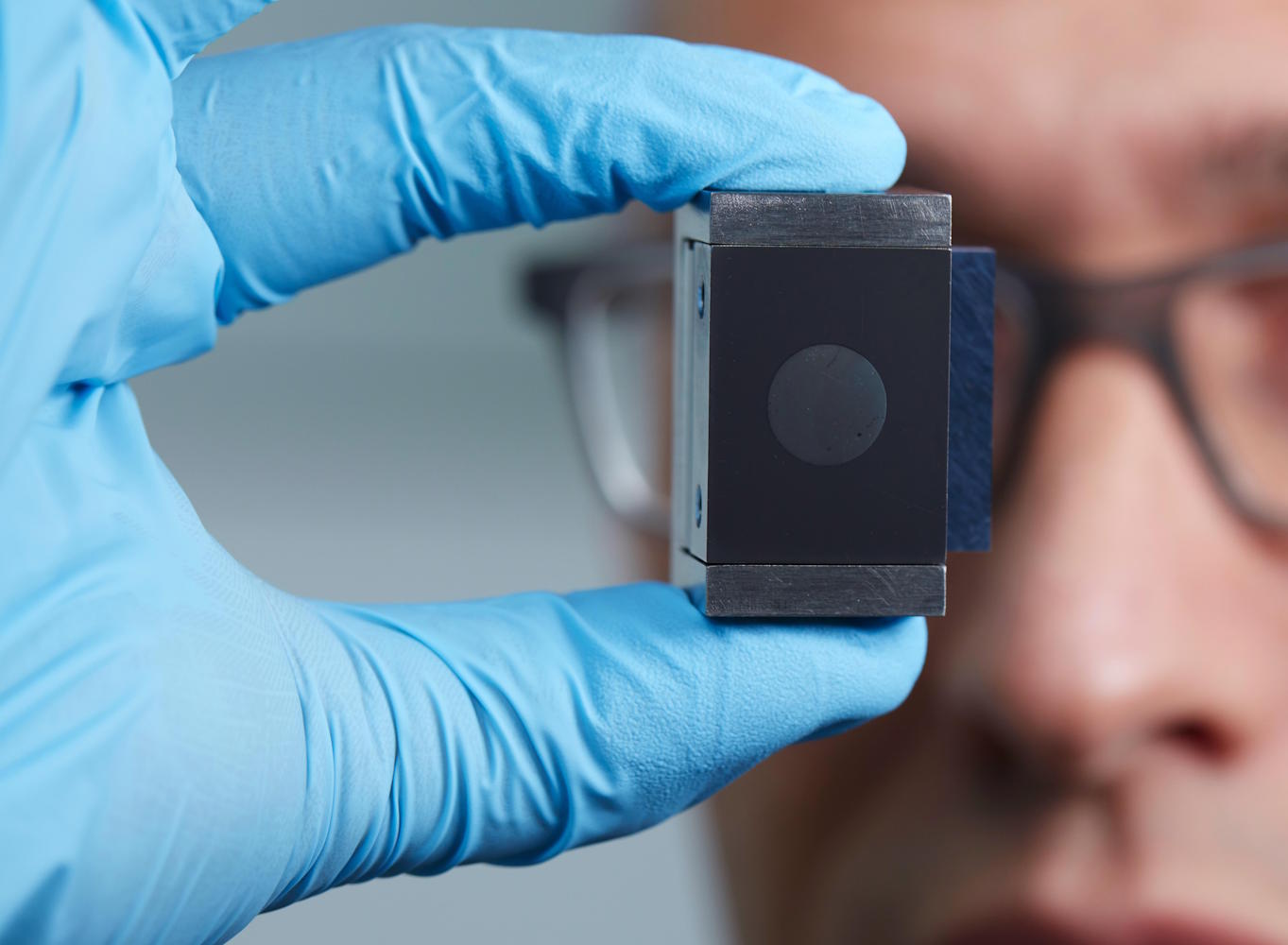

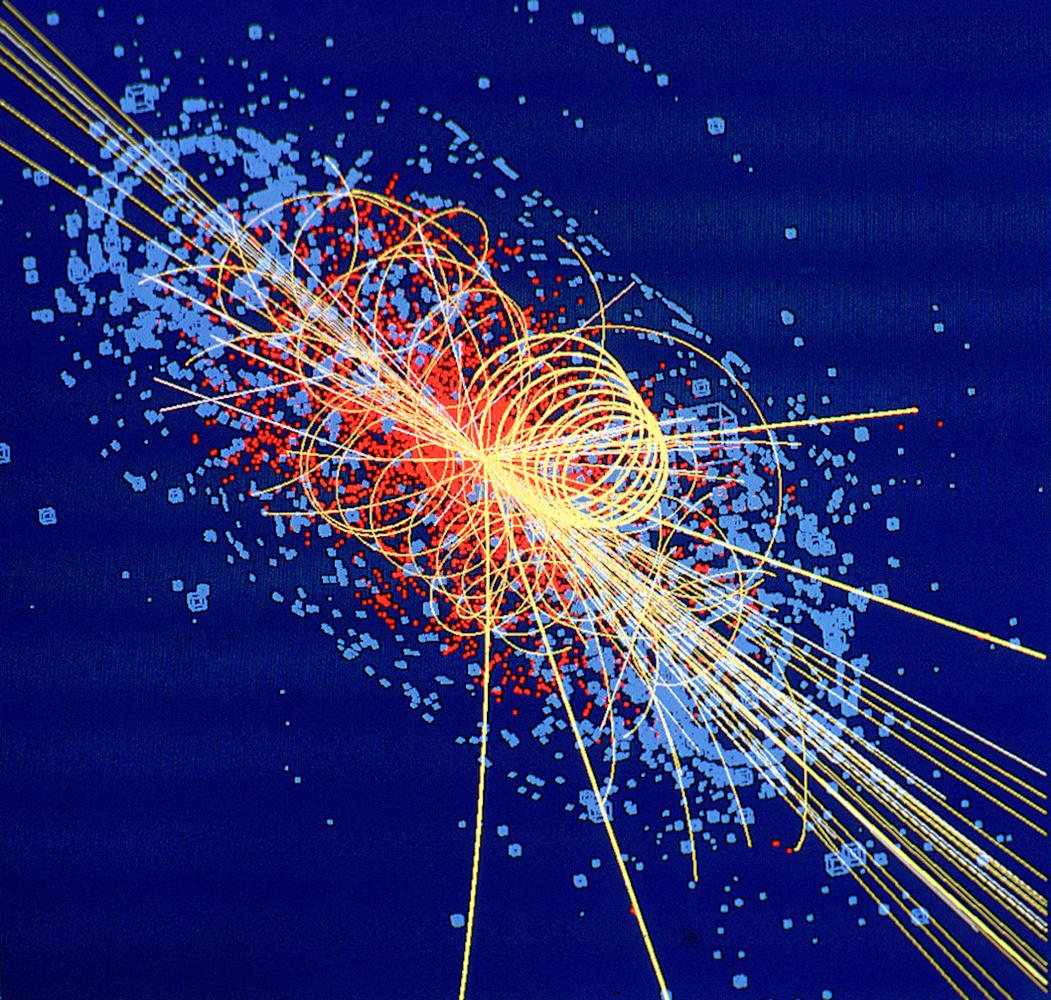

At CERN, Analytics and Data Science is at the heart of processing and interpreting the vast amounts of data generated by the Large Hadron Collider (LHC). This data, produced at an unprecedented scale, is meticulously analysed using sophisticated algorithms and state-of-the-art computational tools. The insights gained not only propel advancements in particle physics but also contribute to innovations across various scientific and engineering domains within the Organization.

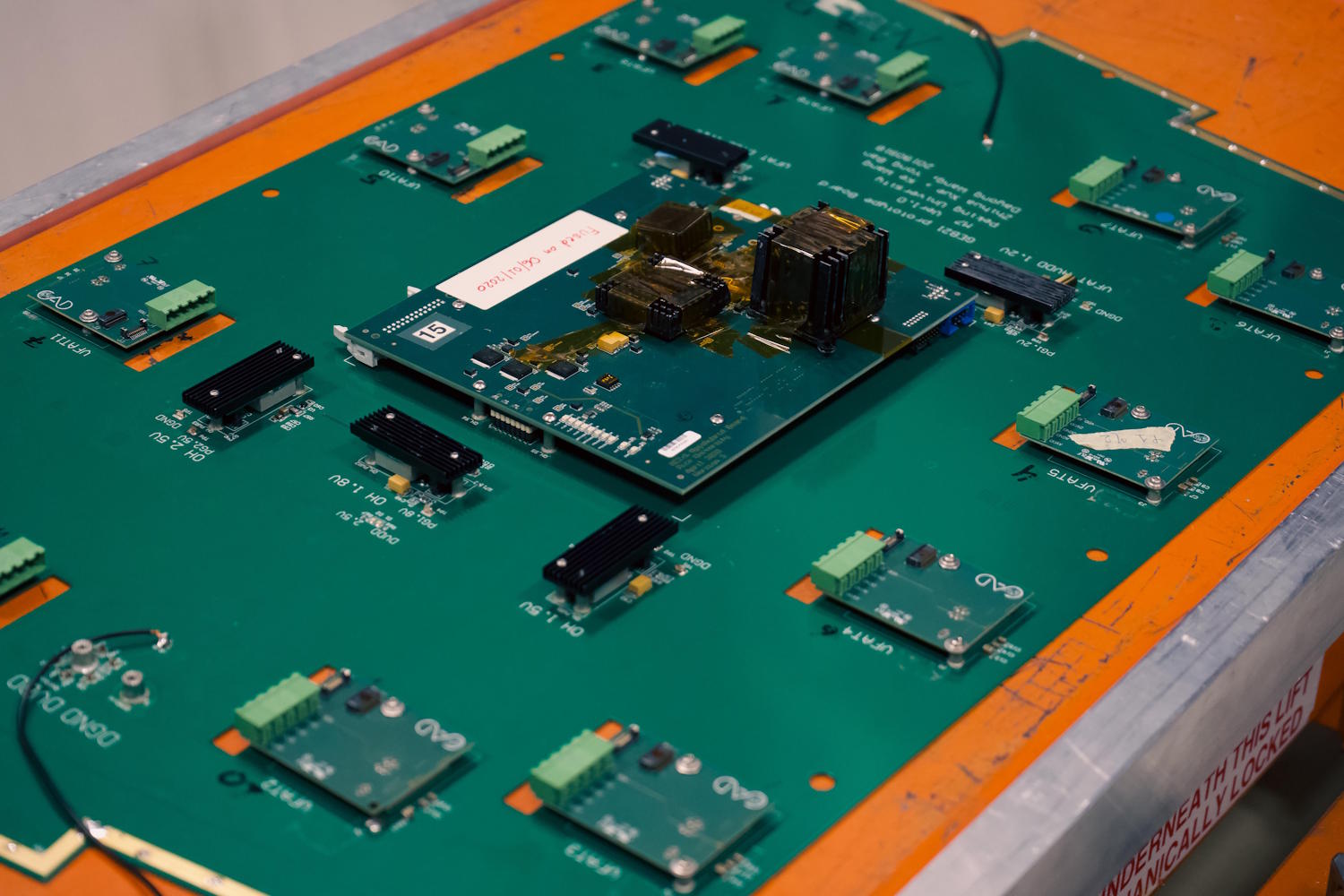

While CERN has traditionally relied on in-house tools for data analysis, the integration of mainstream big data technologies has allowed for more scalable and flexible data management solutions, with an impact that reaches other areas such as materials science and medical research.

The integration of these technologies has transformed the scope and reach of CERN’s Analytics and Data Science efforts. It has facilitated a more collaborative and cross-disciplinary approach, leveraging global expertise and resources. As a result, we are not only pushing the boundaries of fundamental physics but also contributing to technological advancements with broader societal impacts.

Worldwide LHC Computing Grid (WLCG)

- Provides global computing resources for the storage, distribution and analysis of the data generated by the LHC.

- Ensures seamless global collaboration by linking computing resources from over 170 data centres across 42 countries, enabling distributed computing and data access.

- The grid has been instrumental in groundbreaking discoveries, such as that of the Higgs boson, by providing the computational power needed to analyse immense volumes of data.

- It has set a precedent for global scientific collaboration, demonstrating the potential of distributed computing in advancing complex scientific research.

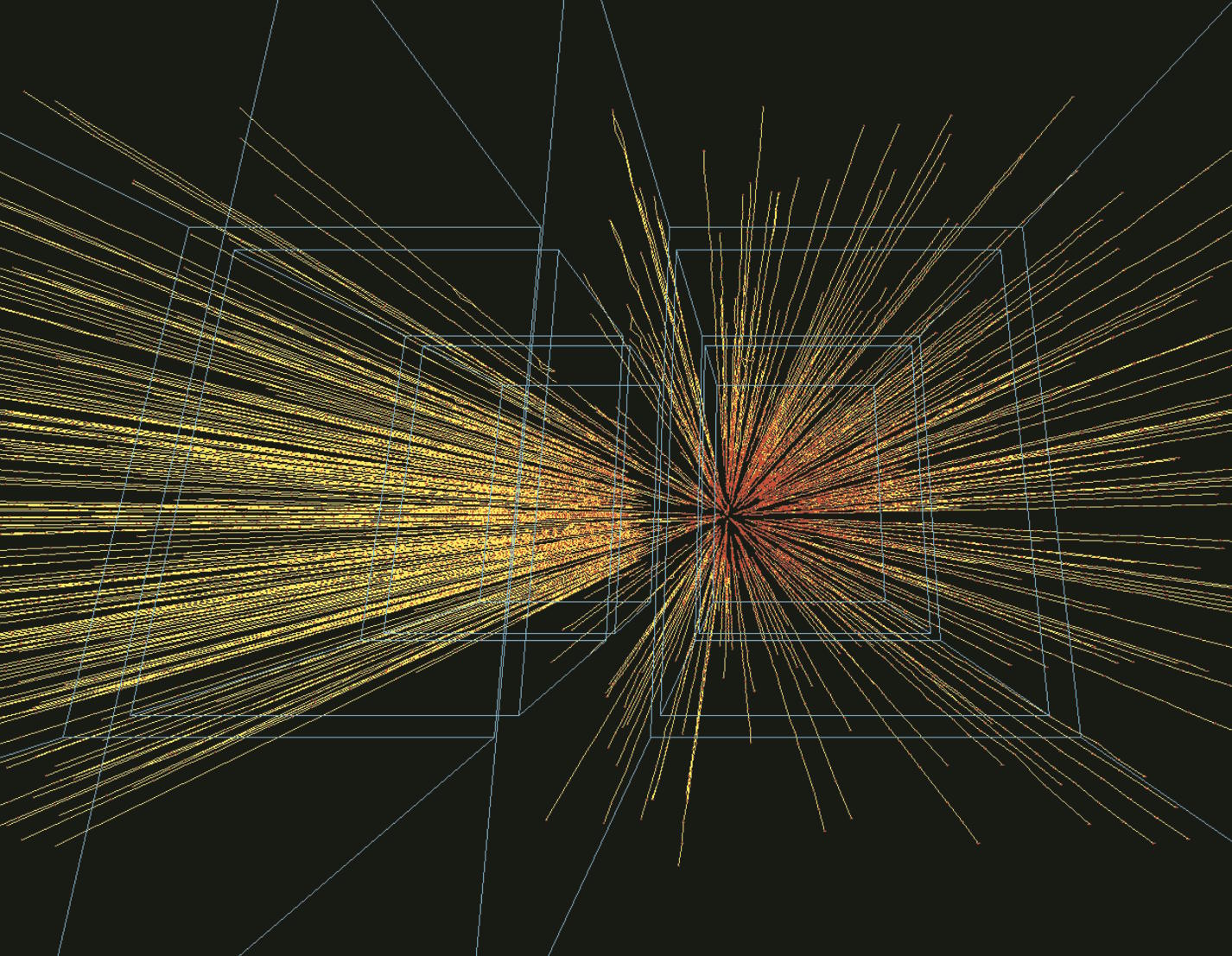

- The grid consists of Tier 0 (CERN), Tier 1 (large data centres), and Tier 2 (smaller centres) computing resources, which work together to manage and process data in a hierarchical structure.

- It utilizes a combination of high-performance computing, cloud services, and data storage technologies to handle petabytes of data efficiently.

- The WLCG has been essential to the success of the LHC, providing the backbone for data analysis and scientific discovery.

- It serves as a model for future distributed computing projects, demonstrating the power of global cooperation and advanced technology in pushing the boundaries of science.
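The tiered structure described above can be illustrated with a toy model. The Python sketch below treats each computing centre as a node in a tree. It pushes a dataset down from Tier 0 and stops at a chosen tier.

The following names are hypothetical and exist only for this illustration:

- the `Site` class and the `distribute` function
- the site names (`T0-CERN`, `T1-A`, `T2-A1` and so on)
- the dataset labels

The rule that a dataset is copied to every site down to a given tier is also a simplifying assumption. It is not how WLCG actually places data, since that is handled by dedicated data management services with much richer policies.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field


@dataclass
class Site:
    """A computing centre in a simplified, hypothetical tier hierarchy."""
    name: str
    tier: int
    children: list[Site] = field(default_factory=list)
    datasets: set[str] = field(default_factory=set)

    def attach(self, child: Site) -> Site:
        # In this toy model a child must sit exactly one tier below its parent.
        if child.tier != self.tier + 1:
            raise ValueError(
                f"{child.name} (Tier {child.tier}) cannot attach to "
                f"{self.name} (Tier {self.tier})"
            )
        self.children.append(child)
        return child


def distribute(root: Site, dataset: str, max_tier: int) -> list[str]:
    """Copy `dataset` from `root` down the hierarchy to every site whose tier
    is at most `max_tier`. Returns receiving site names in breadth-first order."""
    received: list[str] = []
    queue = deque([root])
    while queue:
        site = queue.popleft()
        if site.tier > max_tier:
            continue  # below the cut-off tier: skip this site and its subtree
        site.datasets.add(dataset)
        received.append(site.name)
        queue.extend(site.children)
    return received


# Hypothetical topology: one Tier 0, two Tier 1 centres, three Tier 2 centres.
t0 = Site("T0-CERN", 0)
t1a = t0.attach(Site("T1-A", 1))
t1b = t0.attach(Site("T1-B", 1))
t1a.attach(Site("T2-A1", 2))
t1a.attach(Site("T2-A2", 2))
t1b.attach(Site("T2-B1", 2))

print(distribute(t0, "raw-run-001", max_tier=1))
# ['T0-CERN', 'T1-A', 'T1-B']
print(distribute(t0, "analysis-sample-042", max_tier=2))
# ['T0-CERN', 'T1-A', 'T1-B', 'T2-A1', 'T2-A2', 'T2-B1']
```

A breadth-first traversal is used so that every Tier 1 centre is reached before any Tier 2 centre, echoing the top-down shape of the hierarchy. This is a modelling convenience, not a statement about the order in which real transfers happen.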